We have seen a few projects where the client insists on using SQL Server, but their workload generates a bunch of semi-structured JSON data for us to store. Now we need to bridge the gap and efficiently query it. One realistic case study where this situation may pop up would be building a browser extension that helps capture and organise wish lists from various platforms such as Amazon or eBay.

Let’s build a wish list

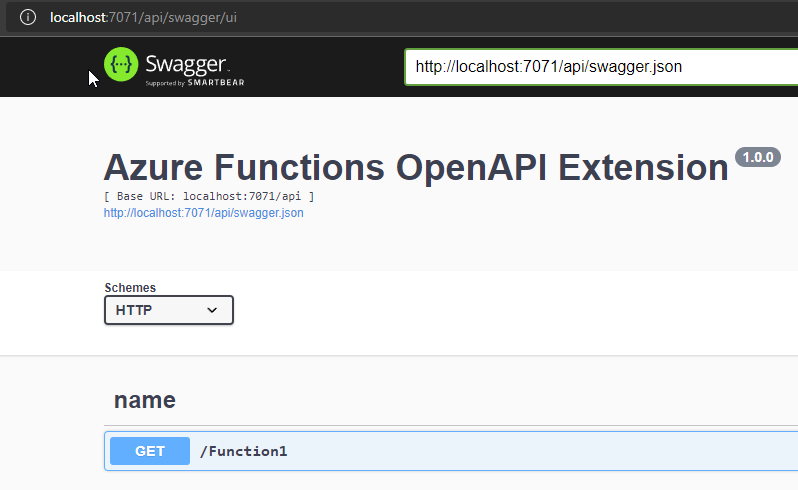

Our journey would likely start with a web browser. We may have a browser extension of some sort that would send information about an item we’re currently watching to an API. That API will have to make sense of all the different attributes each website provides and try to store them in a way that makes querying as easy and efficient as possible.

The catch is, we don’t know the full list of attributes. And, probably, we should not care – a perfect data model for frequently changing e-commerce websites would be hard to deduce and likely very painful to maintain. We could just lump it all together and chuck it into a schema-less data store like Cosmos DB or Mongo, but for the sake of argument we’ll imagine the client was adamant they needed SQL Server because it’s a business decision they made a long time ago.

Not a huge drama

SQL Server has functions to work with JSON data – all we have to do is put it into a sufficiently big column. We can save heaps of time creating one simple data model and calling it a day.

Moving on to coding, let us focus on data retrieval and skip the API part for now – it’s a whole different can of worms and will distract us from our point. From here on we’ll be exploring ways to query the data.

Test Bench

We’ve created a small EF Core project where the DbContext looks something like this:

class SHDbContext : DbContext

{

public DbSet<WishlistEntry> WishlistEntries { get; set; }

protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)

{

base.OnConfiguring(optionsBuilder);

optionsBuilder.UseSqlServer(Environment.GetEnvironmentVariable("DB_CONNSTR")).LogTo(Console.WriteLine);

}

}

where WishlistEntry is defined like so:

class WishlistEntry

{

public int Id { get; set; }

public int CustomerId { get; set; }

[MaxLength(300)]

public string ItemSource { get; set; }

public string ItemData { get; set; }

[DatabaseGenerated(DatabaseGeneratedOption.Computed)]

public string ItemCategory { get; set; }

}

class ItemData

{

public string Sku { get; set; }

public string Name { get; set; }

public List<Category> Category { get; set; }

}

class Category

{

public string Id { get; set; }

public string Name { get; set; }

}

With the setup out of the way, let us define a sample entry and write a query to pick all entries that contain the category “Connected Home & Housewares”:

{

"sku": 185267,

"name": "Duracell - D Batteries (4-Pack)",

"type": "HardGood",

"price": 9.99,

"upc": "041333430010",

"category": [

{

"id": "pcmcat312300050015",

"name": "Connected Home & Housewares"

},

{

"id": "pcmcat248700050021",

"name": "Housewares"

},

{

"id": "pcmcat303600050001",

"name": "Household Batteries"

},

{

"id": "abcat0208002",

"name": "Alkaline Batteries"

}

],

"shipping": 5.99,

"description": "Compatible with select electronic devices; D size; DURALOCK Power Preserve technology; 4-pack",

"manufacturer": "Duracell",

"model": "MN1300R4Z",

"url": "http://www.bestbuy.com/site/duracell-d-batteries-4-pack/185267.p?id=1051384046551&skuId=185267&cmp=RMXCC",

"image": "http://img.bbystatic.com/BestBuy_US/images/products/1852/185267_sa.jpg"

}

Option one – JsonConvert.DeserializeObject

The naive approach to the desired outcome would be to simply try and deserialise the field and pray Entity Framework would work its magic:

var context = new SHDbContext();

//var sql = context.WishlistEntries.Select(wle => JsonConvert.DeserializeObject<ItemData>(wle.ItemData)

// .Category.Any(c => c.Name == "Connected Home & Housewares")); // throws: The LINQ expression 'c => c.Name == "Connected Home & Housewares"' could not be translated.

Unfortunately, this fails at runtime as EF has no idea how to translate JsonConvert into SQL. We’ll end up having to materialise the query and handle filtering on the client side:

var data = context.WishlistEntries.Select(wle => JsonConvert.DeserializeObject<ItemData>(wle.ItemData))

.AsEnumerable()

.Where(d => d.Category.Any(c => c.Name == "Connected Home & Housewares"))

.ToList();

This will work as expected, and some developers will probably stop here, but this is in fact a time bomb. When our wish lists get big enough, every such query will put a serious strain on SQL Server and the network by fetching the whole table. Not nice.

Option two – write a Value Converter

Technically this is a variation of the previous option, but it leverages the EF Core Value Conversion capability for a nicer-looking query. To enable this, we’ll override OnModelCreating and add a few more lines to the DbContext:

class SHDbContext : DbContext

{

public DbSet<WishlistEntry> WishlistEntries { get; set; }

protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)

{

base.OnConfiguring(optionsBuilder);

optionsBuilder.UseSqlServer(Environment.GetEnvironmentVariable("DB_CONNSTR")).LogTo(Console.WriteLine);

}

protected override void OnModelCreating(ModelBuilder modelBuilder)

{

base.OnModelCreating(modelBuilder);

modelBuilder.Entity<WishlistEntry>()

.Property(e => e.ItemData)

.HasConversion((itemData) => JsonConvert.SerializeObject(itemData), str => JsonConvert.DeserializeObject<ItemData>(str));

}

}

// ...

// note: this assumes WishlistEntry.ItemData is now declared as ItemData rather than string

// finally, use the new converter like so:

var data = context.WishlistEntries.AsEnumerable().Where(d => d.ItemData.Category.Any(c => c.Name == "Connected Home & Housewares")).ToList();

// also not ideal as we still have to filter on client side, effectively scanning the whole table

This simply shifts the JSON conversion code up the food chain, so developers won’t see it often. Unfortunately, this “out of sight – out of mind” approach won’t improve our query plan.

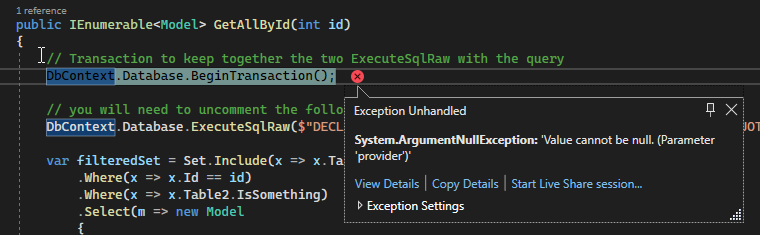

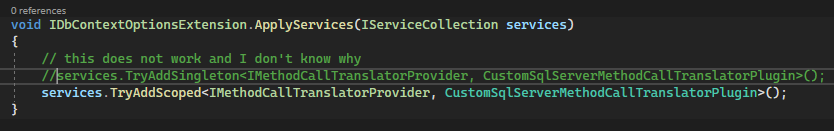

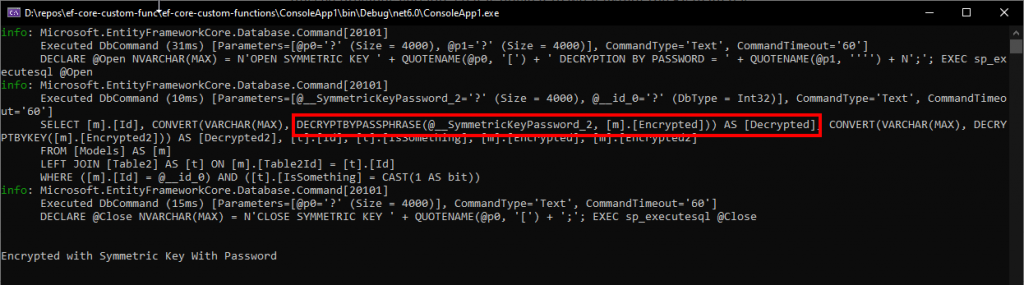

Option three – JSON_VALUE function

It gets pretty clear at this stage that we need to be able to filter by JSON fields on the SQL Server side. Luckily, Microsoft provides a few JSON-handling capabilities. One of them is the JSON_VALUE function, which extracts a single scalar value out of the blob via a given path selector. This is going to eliminate the need to materialise our query on the client and should hugely improve performance. Entity Framework does not support this function by default, but it should be very easy to add:

// in the context class

// adding static function to the DB Context

[DbFunction("JSON_VALUE", IsBuiltIn = true, IsNullable = false)]

public static string JsonValue(string expression, string path) => throw new NotImplementedException();

Our query then becomes something like this:

var data = context.WishlistEntries.Where(d => SHDbContext.JsonValue(d.ItemData, "$.category[0].name").Contains("Connected Home & Housewares")).ToList();

And this is the correct answer: the filter now runs on SQL Server rather than on the client. However, we can further improve performance by adding an index. Indexing the whole JSON blob will not help, but we can create a computed column with the value we care about and index that instead:

-- add computed column

ALTER TABLE WishlistEntries ADD ItemCategory AS JSON_VALUE(ItemData, '$.category[0].name')

-- index the value

CREATE INDEX IX_ItemCategory ON WishlistEntries(ItemCategory) INCLUDE(CustomerId, ItemSource, ItemData);

-- finally, test if the query picks up index by running the following and checking the Actual Execution Plan:

/* test queries */

SELECT * FROM [WishlistEntries] WHERE JSON_VALUE(ItemData, '$.category[0].name') = 'Connected Home & Housewares'

This enhancement also happens to simplify our code a bit as we can now rely on computed column value when writing queries in EF:

// add computed column to WishlistEntry

class WishlistEntry

{

public int Id { get; set; }

public int CustomerId { get; set; }

[MaxLength(300)]

public string ItemSource { get; set; }

public string ItemData { get; set; }

[DatabaseGenerated(DatabaseGeneratedOption.Computed)]

public string ItemCategory { get; set; }

}

// finally the query will be like:

var data = context.WishlistEntries.Where(wle => wle.ItemCategory == "Connected Home & Housewares").ToList();
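If the schema is managed through EF Core migrations rather than hand-written SQL, the same computed column and index could probably be declared in the model instead. The following is only a sketch under that assumption: the context name MigrationsSketchDbContext is hypothetical, the entity is repeated so the snippet stands alone, and it relies on the SQL Server provider's HasComputedColumnSql and IncludeProperties extensions. We have not run it against the project above.

```csharp
using System;
using Microsoft.EntityFrameworkCore;

// Same shape as the WishlistEntry class above (attributes omitted for brevity).
class WishlistEntry
{
    public int Id { get; set; }
    public int CustomerId { get; set; }
    public string ItemSource { get; set; }
    public string ItemData { get; set; }
    public string ItemCategory { get; set; }
}

// Hypothetical context name, used only for this sketch.
class MigrationsSketchDbContext : DbContext
{
    public DbSet<WishlistEntry> WishlistEntries { get; set; }

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        => optionsBuilder.UseSqlServer(Environment.GetEnvironmentVariable("DB_CONNSTR"));

    protected override void OnModelCreating(ModelBuilder modelBuilder)
    {
        var entry = modelBuilder.Entity<WishlistEntry>();

        // Counterpart of: ALTER TABLE WishlistEntries ADD ItemCategory AS JSON_VALUE(...)
        entry.Property(e => e.ItemCategory)
             .HasComputedColumnSql("JSON_VALUE([ItemData], '$.category[0].name')");

        // Counterpart of: CREATE INDEX IX_ItemCategory ... INCLUDE(...)
        entry.HasIndex(e => e.ItemCategory)
             .HasDatabaseName("IX_ItemCategory")
             .IncludeProperties(e => new { e.CustomerId, e.ItemSource, e.ItemData });
    }
}
```

Declaring the column this way also marks ItemCategory as database-generated, so the [DatabaseGenerated] attribute becomes optional. It is still worth checking the generated migration and the Actual Execution Plan before relying on it.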

Conclusion and area for improvement

Keen-eyed readers may have noticed that we hardcoded the query to the first array item. This was a deliberate simplification we opted for to illustrate the point. It should be possible to query an array using OPENJSON … CROSS APPLY like so, but we haven’t explored this avenue far enough to see if we’d ultimately succeed:

var data = context.WishlistEntries.FromSqlRaw(@"SELECT TOP 1 wishlist.*

FROM [WishlistEntries] as wishlist

CROSS APPLY OPENJSON(ItemData, '$.category') WITH (CatName VARCHAR(200) '$.name') as categories

WHERE categories.CatName = {0}", "Connected Home & Housewares").ToList();
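To make the difference between the two approaches concrete, here is a small in-memory sketch that mimics both lookups with System.Text.Json. It is not how SQL Server evaluates either query. The CategoryMatch class and its method names are made up for illustration, and the code only mirrors the matching rules: the '$.category[0].name' path looks at the first element, whereas the OPENJSON version looks at every element.

```csharp
using System;
using System.Linq;
using System.Text.Json;

static class CategoryMatch
{
    // Returns the "category" array, or null when it is missing or not an array.
    static JsonElement? Categories(JsonElement root)
    {
        if (root.ValueKind == JsonValueKind.Object
            && root.TryGetProperty("category", out var cats)
            && cats.ValueKind == JsonValueKind.Array)
        {
            return cats;
        }
        return null;
    }

    // True when the element is an object whose "name" string equals the given name.
    static bool HasName(JsonElement category, string name) =>
        category.ValueKind == JsonValueKind.Object
        && category.TryGetProperty("name", out var n)
        && n.ValueKind == JsonValueKind.String
        && n.GetString() == name;

    // Mimics JSON_VALUE(ItemData, '$.category[0].name') = name: first element only.
    public static bool FirstCategoryIs(string json, string name)
    {
        using var doc = JsonDocument.Parse(json);
        var cats = Categories(doc.RootElement);
        return cats.HasValue
            && cats.Value.GetArrayLength() > 0
            && HasName(cats.Value[0], name);
    }

    // Mimics CROSS APPLY OPENJSON(ItemData, '$.category'): every element is checked.
    public static bool AnyCategoryIs(string json, string name)
    {
        using var doc = JsonDocument.Parse(json);
        var cats = Categories(doc.RootElement);
        return cats.HasValue
            && cats.Value.EnumerateArray().Any(c => HasName(c, name));
    }
}
```

For the sample entry above, searching for "Housewares" would be missed by the first-element lookup, because that category sits at index 1, but found by the scan over all elements. That is exactly the gap the OPENJSON query is meant to close.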

Even though our application is far from complete, we are in a good place. Equipped with these techniques, we can handle almost anything users throw at us and are still able to query data fast enough. The code for this exercise is available on GitHub.