We often get to come in, deploy cloud services for customers, and get out. Some customers have established teams and processes; others are greenfield and rely on us to do the right thing. Regardless of the level of investment, customers expect us to stick to best practice: not only create bits of cloud infrastructure for them but also codify that infrastructure as much as possible. By default, we stick to Terraform for that.

Storing state

To be able to manage infrastructure and detect changes, Terraform needs a place to store the current state of affairs. The easiest solution would be to store the state file locally, but that’s not really an option for CI/CD pipelines. Luckily, we’ve got a bunch of backends to pick from.

This, however, leads to a chicken-and-egg situation: we can’t use Terraform to deploy the storage backend without already having a storage backend where it can keep the state file.

Bicep

So far, we’ve mostly been dealing with Azure, so it made sense to prep a quick Bicep snippet to create the required resources for us. One thing to keep in mind is that Bicep deploys resources into the resourceGroup scope by default. This implies we’ve already created a resource group, which is not what we want here. To switch it up, we need to start at the subscription level (this is what we are usually given anyway) and create a resource group followed by whatever else we need. The recommended way to do that is to declare a main template that creates the RG and references a module with all the other good stuff:

    targetScope = 'subscription' // switching scopes here

    // declaring some parameters so we can easier manage the pipeline later
    @maxLength(13)
    @minLength(2)
    param prefix string

    param tfstate_rg_name string = '${prefix}-terraformstate-rg'

    @allowed([
      'australiaeast'
    ])
    param location string

    // creating resource group
    resource rg 'Microsoft.Resources/resourceGroups@2021-01-01' = {
      name: tfstate_rg_name
      location: location
    }

    // Deploying storage account via module reference
    module stg './tfstate-storage.bicep' = {
      name: 'storageDeployment'
      scope: resourceGroup(rg.name)
      params: {
        storageAccountName: '${prefix}statetf${take(uniqueString(prefix),4)}'
        location: location
      }
    }
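For the pipeline to read the generated storage account name from the deployment, the main template would need to surface the module's outputs itself, since a module's outputs are not exposed by the parent automatically. A minimal sketch, assuming it is appended to the main template above (it relies on the `rg` and `stg` symbols declared there); the output names are illustrative and not taken from the original post:

```bicep
// Fragment: append to the subscription-scoped main template.
// 'rg' and 'stg' are the resource group and module symbols declared above.

// Name of the resource group that holds the Terraform state storage.
output resourceGroupName string = rg.name

// Re-export the values returned by the storage module so that the
// deployment task can pick them up as deployment outputs.
output storageAccountName string = stg.outputs.storageAccountName
output containerName string = stg.outputs.containerName
```

With outputs like these declared, the deployment result should carry the three values a Terraform azurerm backend typically needs to locate the state container.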

The module, tfstate-storage.bicep, is where the important bits live:

    param storageAccountName string
    param location string
    param containerName string = 'tfstate'

    output storageAccountName string = storageAccountName
    output containerName string = containerName

    resource storageAccount_resource 'Microsoft.Storage/storageAccounts@2021-06-01' = {
      name: storageAccountName
      location: location
      sku: {
        name: 'Standard_LRS'
      }
      kind: 'StorageV2'
      properties: {
        allowBlobPublicAccess: true
        networkAcls: {
          bypass: 'AzureServices'
          virtualNetworkRules: []
          ipRules: []
          defaultAction: 'Allow'
        }
        supportsHttpsTrafficOnly: true
        encryption: {
          services: {
            blob: {
              keyType: 'Account'
              enabled: true
            }
          }
          keySource: 'Microsoft.Storage'
        }
        accessTier: 'Hot'
      }
    }

    resource blobService_resource 'Microsoft.Storage/storageAccounts/blobServices@2021-06-01' = {
      parent: storageAccount_resource
      name: 'default'
      properties: {
        cors: {
          corsRules: []
        }
        deleteRetentionPolicy: {
          enabled: false
        }
      }
    }

    resource storageContainer_resource 'Microsoft.Storage/storageAccounts/blobServices/containers@2021-06-01' = {
      parent: blobService_resource
      name: containerName
      properties: {
        immutableStorageWithVersioning: {
          enabled: false
        }
        defaultEncryptionScope: '$account-encryption-key'
        denyEncryptionScopeOverride: false
        publicAccess: 'None'
      }
    }

Assuming we just want to chuck all our assets into a repository and drive from there, it’d make sense to also write a simple Azure DevOps (ADO) deployment pipeline. Previously we’d have had to opt for the AzureCLI task and do something like this:

- task: AzureCLI@2

inputs:

azureSubscription: $(azureServiceConnection)

scriptType: bash

scriptLocation: inlineScript

inlineScript: |

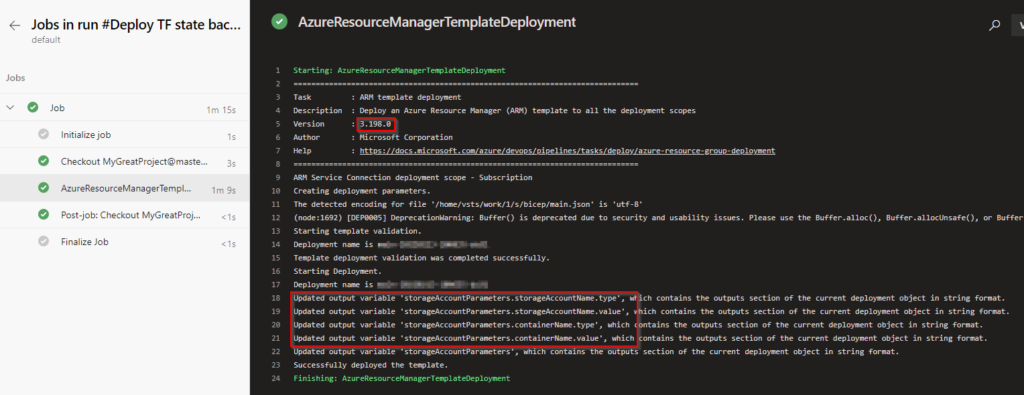

# steps to create RG

az deployment group create --resource-group $(resourceGroupName) --template-file bicep/main.bicepLuckily, the work has been done and starting with agent version 3.199, AzureResourceManagerTemplateDeployment does support Bicep deployments natively! Unfortunately, at the time of testing our ADO-hosted agent was still at version 3.198 so we had to cheat and compile Bicep down to ARM manually. The final pipeline, however, would look something like this:

    trigger: none # intended to run manually

    name: Deploy TF state backend via Bicep

    pool:
      vmImage: 'ubuntu-latest'

    variables:
      - group: "bootstrap-state-variable-grp" # define variable groups to point to correct subscription

    steps:
      - task: AzureResourceManagerTemplateDeployment@3
        inputs:
          deploymentScope: 'Subscription'
          azureResourceManagerConnection: $(azureServiceConnection)
          subscriptionId: $(targetSubscriptionId)
          location: $(location)
          templateLocation: 'Linked Artifact'
          csmFile: '$(System.DefaultWorkingDirectory)/bicep/main.bicep' # on dev machine, compile into ARM (az bicep build --file .\bicep\main.bicep) and use that instead until agent gets update to 3.199.x
          deploymentMode: 'Incremental'
          deploymentOutputs: 'storageAccountParameters'
          overrideParameters: '-prefix $(prefix) -location $(location)'

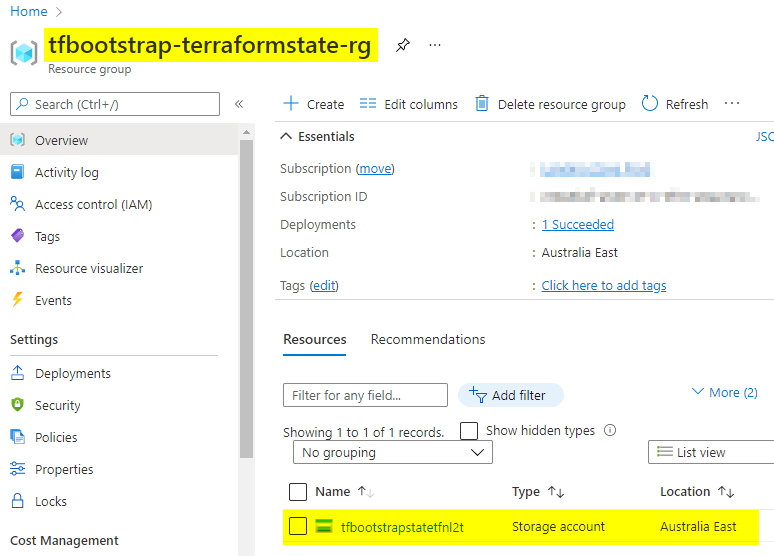

Running through ADO should yield us a usable storage account within a brand-new resource group:

Where to from here

Having dealt with the foundations, we should be able to capture the output of this step (we mostly care about the storage account name, as its suffix is generated by uniqueString()) and feed it to the Terraform backend configuration. We’ll cover it in the next part of this series.
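A note on the generated name: `uniqueString()` is a deterministic hash of its arguments, so seeding it with `prefix` alone gives the same suffix for the same prefix in every subscription. Since storage account names must be globally unique, one possible variation is to mix the subscription ID into the seed. This is a sketch of that idea and not something the template above does; the variable name `stateAccountName` is illustrative:

```bicep
targetScope = 'subscription'

@minLength(2)
@maxLength(13)
param prefix string

// Hashing the subscription ID together with the prefix keeps the name
// stable across re-deployments to one subscription, while producing a
// different suffix when the same prefix is used in another subscription.
var stateAccountName = '${prefix}statetf${take(uniqueString(subscription().subscriptionId, prefix), 4)}'

output storageAccountName string = stateAccountName
```

The 13-character limit on `prefix` still applies: 13 characters plus `statetf` (7) plus the 4-character suffix comes to exactly 24, the maximum length of a storage account name.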

Conclusion

Existing solutions in this space have so far relied on either PowerShell or the Azure CLI to do the job. That’s still doable but can get a bit bulky, especially if we want to query outputs. Now that Bicep support is landing in AzureResourceManagerTemplateDeployment@3 directly, we will likely see this become the recommended approach.