Getting Blazor AuthorizeView to work with Azure Static Web App

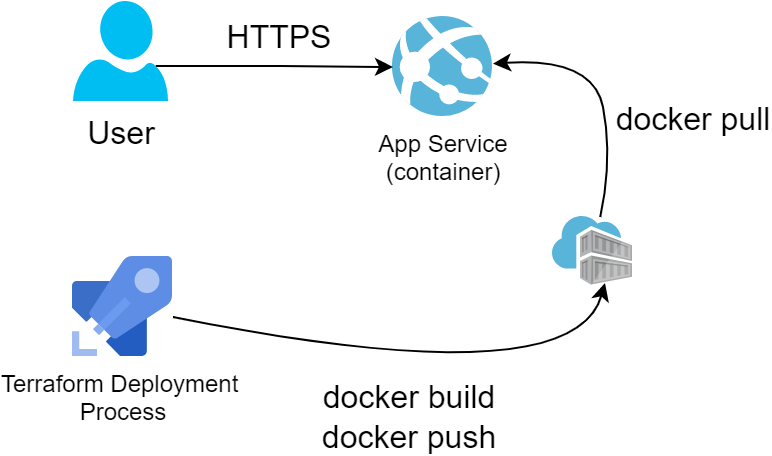

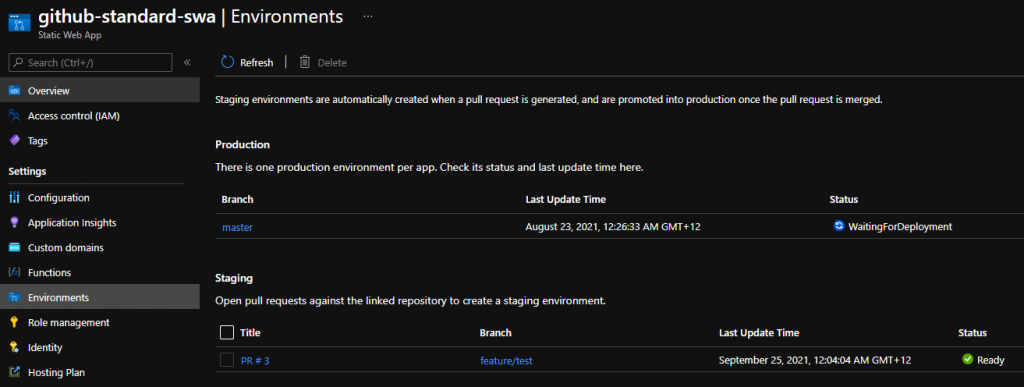

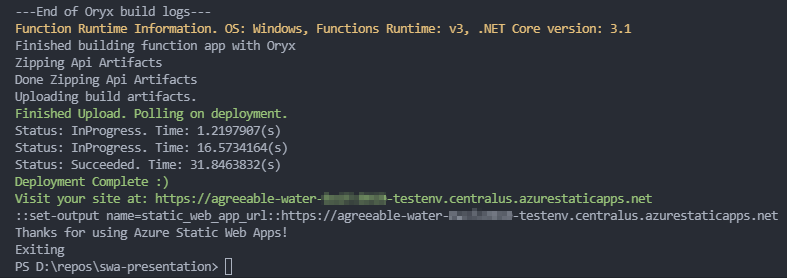

Recently, we inherited an Azure Static Web App project with a Blazor WASM frontend. The previous developer had given up on configuring the built-in authentication that comes bundled with Static Web Apps and was about to ditch the whole platform and rewrite the API backend for ASP.NET and App Services. That would have let us use ASP.NET Membership and take full control of the user lifecycle, but it would also have meant building our own user management layer, which is redundant in our case. We would also have lost features like Azure AD auth and the user invite system that we get for free with SWA.

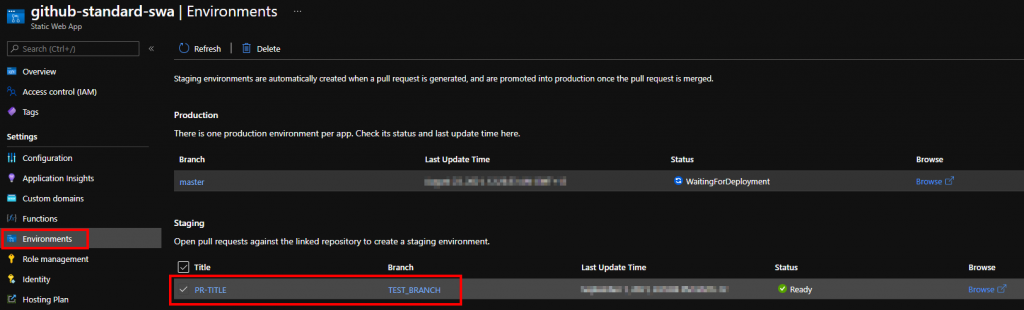

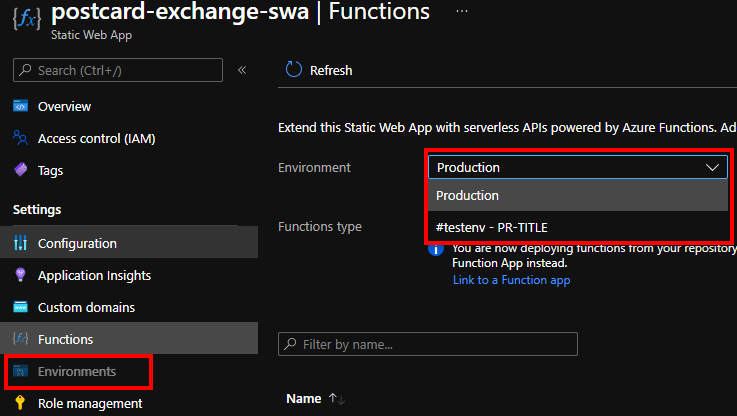

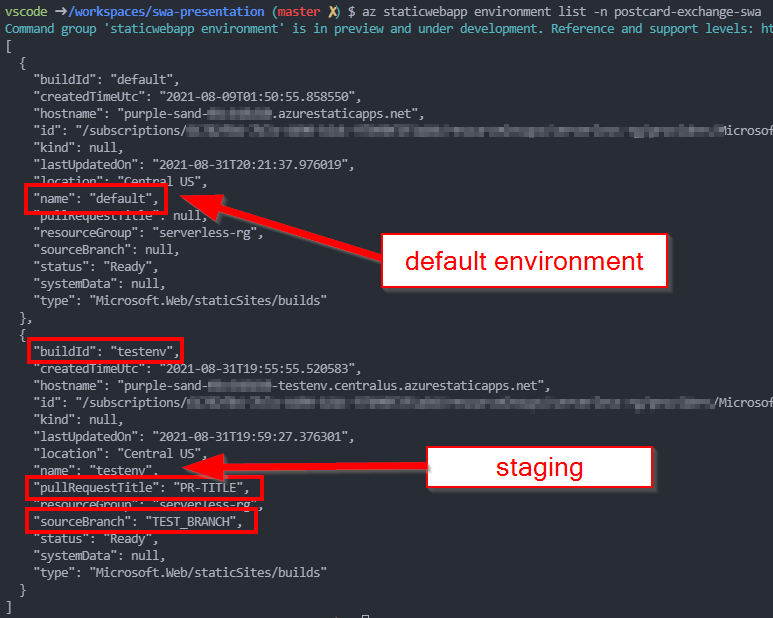

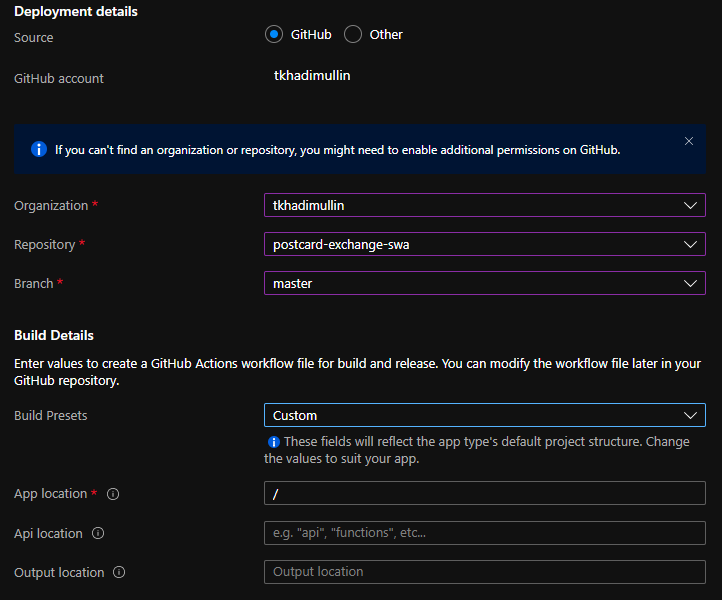

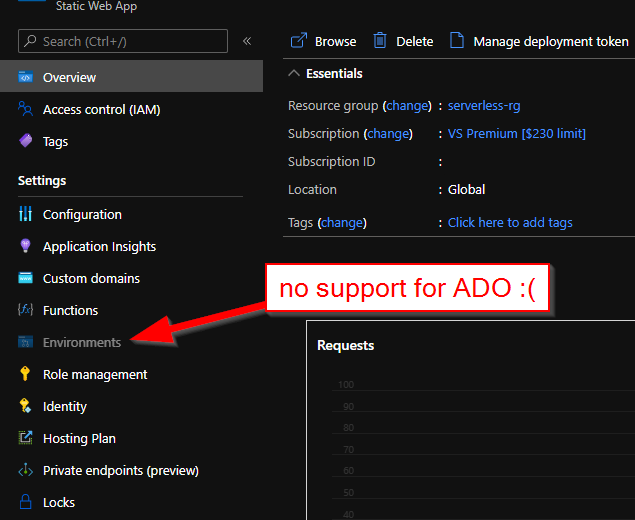

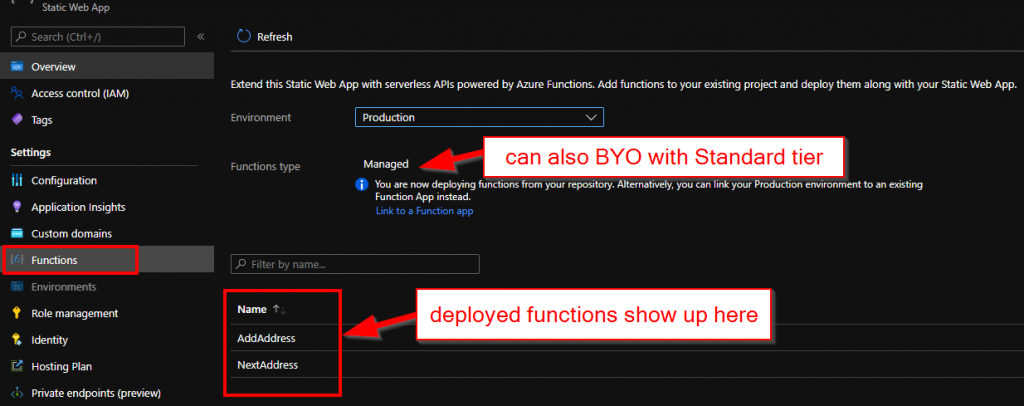

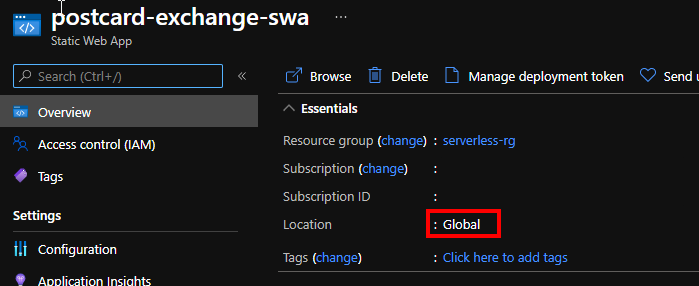

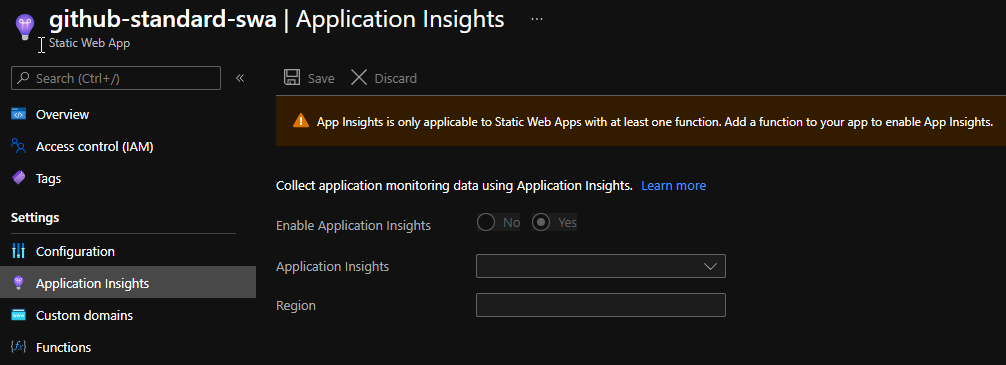

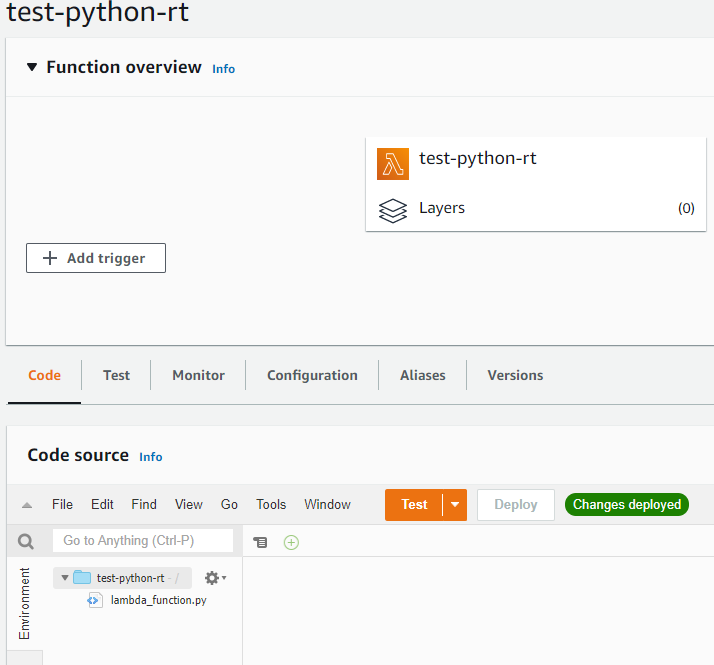

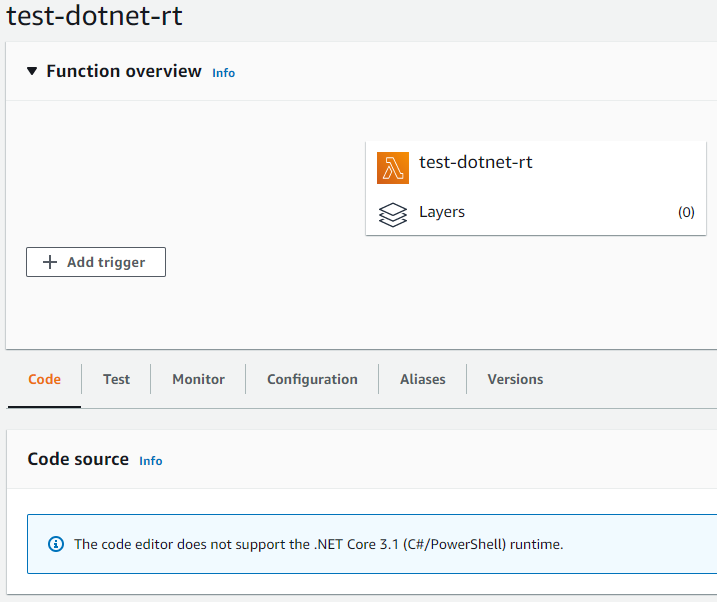

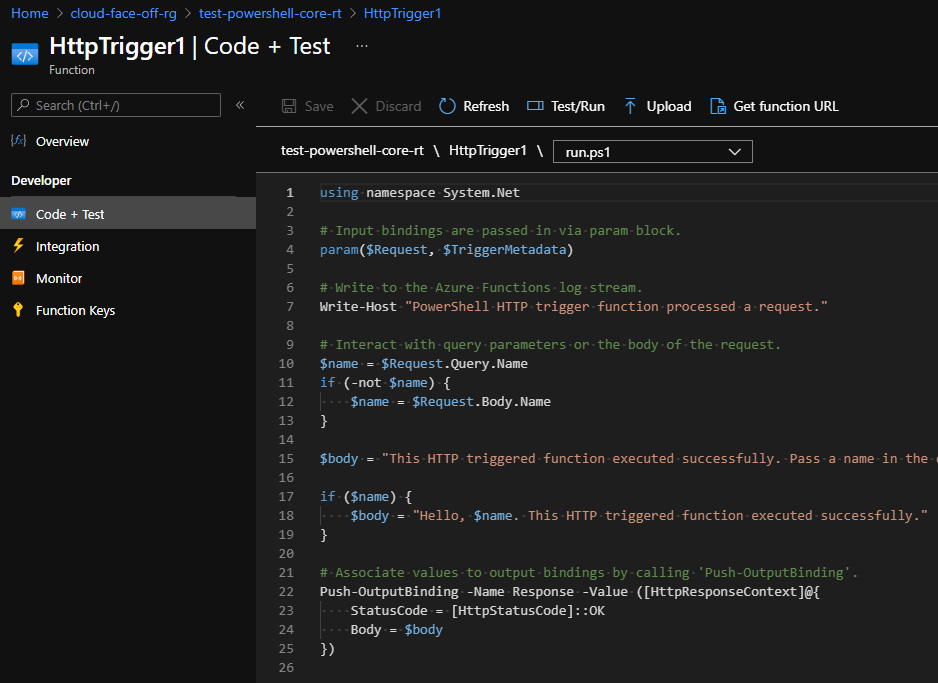

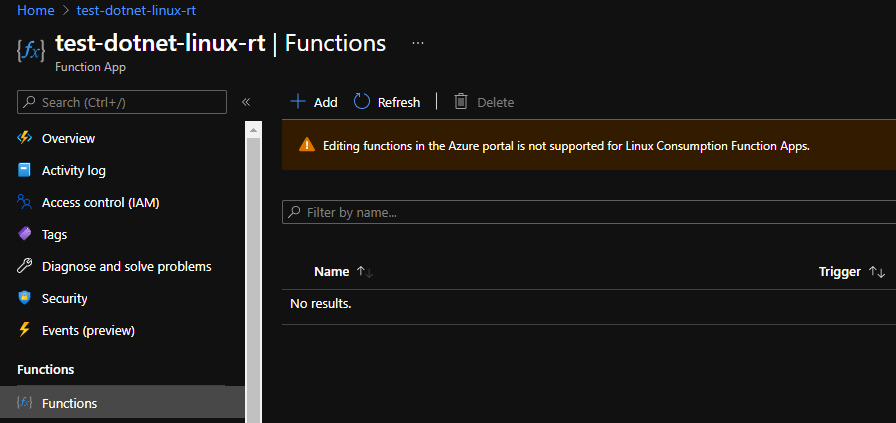

Inventory inspection

Ultimately, we have two puzzle pieces here: Static Web Apps authentication and Blazor.

Azure Static Web Apps provides built-in authentication and authorization for web applications. Users can sign in with their preferred identity provider, such as Azure Active Directory, GitHub, Twitter, Facebook, or Google, to access resources on the app. When a user logs in, Azure Static Web Apps takes care of the tokens and exposes an endpoint that returns user information and authentication status in a simple JSON format:

    {
      "identityProvider": "github",
      "userId": "d75b260a64504067bfc5b2905e3b8182",
      "userDetails": "username",
      "userRoles": ["anonymous", "authenticated"],
      "claims": [{
        "typ": "name",
        "val": "Azure Static Web Apps"
      }]
    }

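All we care about here is the userRoles property, which is available to both the frontend and the API. The frontend reads it from the /.auth/me endpoint, which returns this object wrapped in a clientPrincipal property, while API functions receive the same data in the x-ms-client-principal request header.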
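For the API side, here is a minimal sketch of reading the roles from that header, which Static Web Apps sends as Base64-encoded JSON. The header value is simulated here from the sample payload above, so the snippet runs without a deployed app; the variable names are illustrative only.

```csharp
using System;
using System.Linq;
using System.Text;
using System.Text.Json;

// Simulate the x-ms-client-principal header from the sample payload above.
string payload = "{\"identityProvider\":\"github\",\"userId\":\"d75b260a64504067bfc5b2905e3b8182\"," +
                 "\"userDetails\":\"username\",\"userRoles\":[\"anonymous\",\"authenticated\"]}";
string headerValue = Convert.ToBase64String(Encoding.UTF8.GetBytes(payload));

// Decode the header: Base64 -> UTF-8 JSON -> role list.
string json = Encoding.UTF8.GetString(Convert.FromBase64String(headerValue));
using JsonDocument doc = JsonDocument.Parse(json);
string[] roles = doc.RootElement
    .GetProperty("userRoles")
    .EnumerateArray()
    .Select(r => r.GetString() ?? string.Empty)
    .ToArray();

Console.WriteLine(string.Join(", ", roles)); // anonymous, authenticated
```

In a real function the header value would come from the incoming request instead of being built locally.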

Moving on to the consumer side, Blazor offers the AuthorizeView component to show content only to authorized users. When an unauthorized user tries to access a page, Blazor renders the contents of the NotAuthorized tag instead, which will most likely point to a login page. The decision on whether a given user is authorized to see a page is delegated to the AuthenticationStateProvider service. The default implementation plugs into ASP.NET membership, which is exactly what we're trying to avoid.
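As an illustration of the markup described above, a page might look roughly like this. This is a sketch under assumptions: it picks the GitHub provider for the login link, relies on the Static Web Apps /.auth/login/<provider> and /.auth/logout routes, and assumes the router in App.razor is wrapped in CascadingAuthenticationState.

```razor
@* Assumes @using Microsoft.AspNetCore.Components.Authorization in _Imports.razor *@
<AuthorizeView>
    <Authorized>
        @* context is the current AuthenticationState *@
        <p>Hello, @context.User.Identity?.Name!</p>
        <a href="/.auth/logout">Log out</a>
    </Authorized>
    <NotAuthorized>
        <a href="/.auth/login/github">Log in with GitHub</a>
    </NotAuthorized>
</AuthorizeView>
```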

Making changes

Luckily, writing a custom Provider and injecting it instead of the stock one is a matter of configuring the DI container at startup:

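    var builder = WebAssemblyHostBuilder.CreateDefault(args);

    // The provider needs an HttpClient pointed at the app's own origin (as in the default template).
    builder.Services.AddScoped(sp => new HttpClient { BaseAddress = new Uri(builder.HostEnvironment.BaseAddress) });
    builder.Services.AddAuthorizationCore();
    // Register our AuthStateProvider class in place of the stock provider.
    builder.Services.AddScoped<AuthenticationStateProvider, AuthStateProvider>();

The provider would then look something like this: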

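    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Net.Http;
    using System.Security.Claims;
    using System.Text.Json;
    using System.Threading.Tasks;
    using Microsoft.AspNetCore.Components.Authorization;

    // Shape of the /.auth/me response: { "clientPrincipal": { ... } }
    public class SwaPrincipalResponse
    {
        public ClientPrincipal? ClientPrincipal { get; set; }
    }

    // Not shown in the original listing; assumed to mirror the JSON fields above.
    public class ClientPrincipal
    {
        public string? IdentityProvider { get; set; }
        public string? UserId { get; set; }
        public string? UserDetails { get; set; }
        public List<string>? UserRoles { get; set; }
    }

    public class AuthStateProvider : AuthenticationStateProvider
    {
        private static readonly JsonSerializerOptions JsonOptions = new() { PropertyNameCaseInsensitive = true };
        private readonly HttpClient _httpClient;
        private readonly AuthenticationState _anonymous;

        public AuthStateProvider(HttpClient httpClient)
        {
            _httpClient = httpClient;
            // A ClaimsIdentity without an authentication type counts as unauthenticated.
            _anonymous = new AuthenticationState(new ClaimsPrincipal(new ClaimsIdentity()));
        }

        public override async Task<AuthenticationState> GetAuthenticationStateAsync()
        {
            var principalResponse = await _httpClient.GetStringAsync("/.auth/me");
            var kv = JsonSerializer.Deserialize<SwaPrincipalResponse>(principalResponse, JsonOptions);
            var principal = kv?.ClientPrincipal;

            if (principal == null || string.IsNullOrWhiteSpace(principal.IdentityProvider))
                return _anonymous;

            // Every visitor carries the "anonymous" role, so it says nothing about being signed in.
            var roles = principal.UserRoles?
                .Except(new[] { "anonymous" }, StringComparer.OrdinalIgnoreCase)
                .ToList() ?? new List<string>();
            if (roles.Count == 0)
                return _anonymous;

            // Passing an authentication type marks the identity as authenticated.
            var identity = new ClaimsIdentity(principal.IdentityProvider);
            identity.AddClaim(new Claim(ClaimTypes.NameIdentifier, principal.UserId ?? string.Empty));
            identity.AddClaim(new Claim(ClaimTypes.Name, principal.UserDetails ?? string.Empty));
            identity.AddClaims(roles.Select(r => new Claim(ClaimTypes.Role, r)));

            return new AuthenticationState(new ClaimsPrincipal(identity));
        }
    }

And this unlocks pages with AuthorizeView for us.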
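To see why the provider's mapping is enough for AuthorizeView, here is a small standalone sketch (not part of the app itself) that builds the same two kinds of principal by hand. It uses the sample values from the JSON above and shows that an identity created with an authentication type reports as authenticated and answers role checks, while the empty identity does not.

```csharp
using System;
using System.Security.Claims;

// Same construction the provider uses for its anonymous fallback.
var anonymous = new ClaimsPrincipal(new ClaimsIdentity());

// Same construction the provider uses for a signed-in user (sample values).
var identity = new ClaimsIdentity("github");
identity.AddClaim(new Claim(ClaimTypes.Name, "username"));
identity.AddClaim(new Claim(ClaimTypes.Role, "authenticated"));
var user = new ClaimsPrincipal(identity);

Console.WriteLine(anonymous.Identity?.IsAuthenticated); // False
Console.WriteLine(user.Identity?.IsAuthenticated);      // True
Console.WriteLine(user.IsInRole("authenticated"));      // True
Console.WriteLine(user.Identity?.Name);                 // username
```

Because roles are stored as ClaimTypes.Role claims, role-based checks such as the Roles parameter on AuthorizeView can work against the roles assigned in Static Web Apps.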

In conclusion, if you’re working with a Blazor frontend and Azure Static Web Apps, take advantage of the built-in Azure SWA Authentication for Blazor. It can save you from having to rewrite your API backend and allows for easy integration with various identity providers.