Okay, despite the roaring success we had with our previous attempt at this, setting up VS Code dev containers for AWS SAM proved to be quite a pain, and we’re still not sure it’s worth it. But it was interesting to set up and may be useful in some circumstances, so here we go.

Some issues we ran into

The biggest issue by far was that SAM relies heavily on containers, which for us means going a level deeper and using the docker-in-docker dev container as a starting point. Its base image comes with the bare minimum of software, and the dotnet SDK is not part of it. So we’ll have to install everything ourselves:

    #!/usr/bin/env bash
    # Installs the AWS CLI v2, the SAM CLI and the .NET Core 3.1 SDK into the dev container image.
    set -e

    if [ "$(id -u)" -ne 0 ]; then
        echo 'Script must be run as root. Use sudo, su, or add "USER root" to your Dockerfile before running this script.'
        exit 1
    fi

    # AWS CLI v2 (we are already root at this point, so no sudo is needed)
    curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
    unzip awscliv2.zip
    ./aws/install
    rm -rf ./aws ./awscliv2.zip
    echo "AWS CLI version $(aws --version)"

    # AWS SAM CLI
    curl -L "https://github.com/aws/aws-sam-cli/releases/latest/download/aws-sam-cli-linux-x86_64.zip" -o "aws-sam-cli-linux-x86_64.zip"
    unzip aws-sam-cli-linux-x86_64.zip -d sam-installation
    ./sam-installation/install
    echo "SAM version $(sam --version)"
    rm -rf ./sam-installation ./aws-sam-cli-linux-x86_64.zip

    # dotnet SDK 3.1 from the Microsoft package feed for Debian 11
    wget https://packages.microsoft.com/config/debian/11/packages-microsoft-prod.deb -O packages-microsoft-prod.deb
    dpkg -i packages-microsoft-prod.deb
    rm packages-microsoft-prod.deb
    apt-get update
    apt-get install -y apt-transport-https
    apt-get update
    apt-get install -y dotnet-sdk-3.1

    # Installing lambda tools was required to get lambda to work while I was testing different approaches.
    # It may have become redundant after so many iterations and changes to the script, but probably does not hurt.
    dotnet tool install -g Amazon.Lambda.Tools
    # Note: this export only affects the shell running this script.
    export PATH="$PATH:$HOME/.dotnet/tools"

This is fairly straightforward: install the AWS CLI and SAM as described in their documentation, and then install the dotnet SDK. All we need to do now is call the script from the main Dockerfile.
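Once the image has been rebuilt, a quick sanity check can confirm that the tools actually ended up on the PATH. This is a minimal sketch rather than part of the original setup; the helper name `check_tools` is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original setup): report which of the
# given commands can be found on PATH. Returns non-zero if any is missing.
check_tools() {
    local missing=0
    local tool
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Check the tools the install script is supposed to provide.
check_tools aws sam dotnet docker || echo "Some tools are missing; check the install script output."
```

Running this in the container's integrated terminal right after a rebuild gives a faster signal than waiting for the AWS extension to complain about a missing CLI.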

It also helps to pre-populate the container with the extensions we’re going to need anyway:

    "extensions": [
        "ms-azuretools.vscode-docker",
        "amazonwebservices.aws-toolkit-vscode",
        "ms-dotnettools.csharp",
        "redhat.vscode-yaml",
        "zainchen.json" // this probably can be removed
    ],

Debugging experience

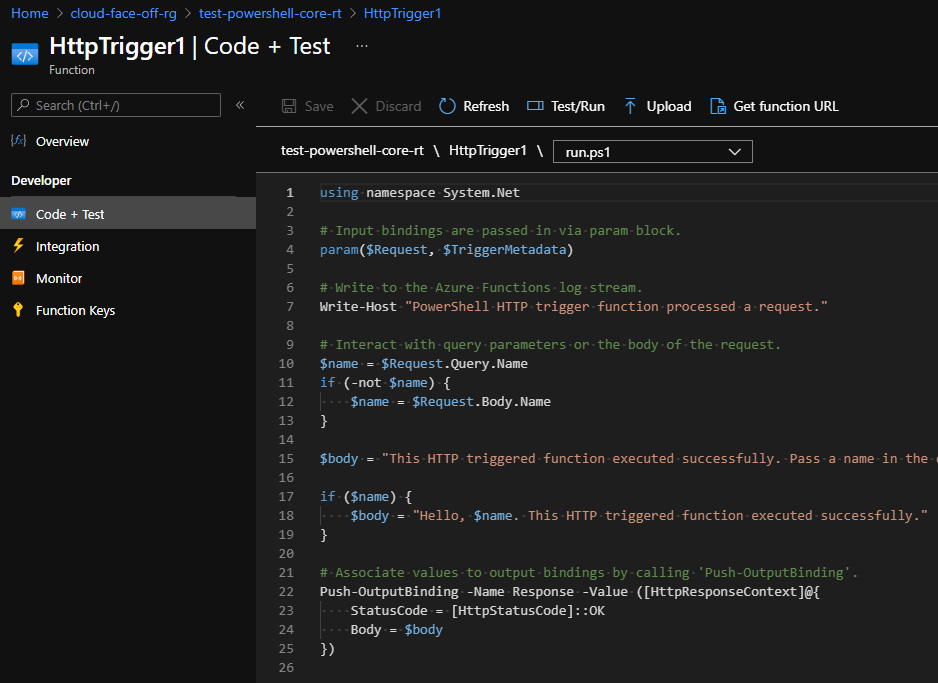

Apparently, debugging AWS Lambda is slightly different from Azure Functions, in the sense that a function is not intended to be invoked from a browser but rather accepts an event via a built-in dispatcher. We could potentially spend more time on it and get it to work with browsers, but this looked good enough for a first stab.
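Because the function takes an event rather than a browser request, a local run needs an event payload handed to it. A rough sketch of doing that from the container's terminal follows. The function name `HelloWorldFunction` and the event fields are assumptions (check the generated `template.yaml` and what the handler actually reads), not something taken from the project itself.

```shell
#!/usr/bin/env bash
# Sketch only: the event contents and the function's logical ID are assumptions.
set -e

event_file="$(mktemp)"
# A minimal made-up event; a real handler may expect more fields than this.
printf '%s\n' '{ "httpMethod": "GET", "path": "/hello" }' > "$event_file"

if command -v sam >/dev/null 2>&1 && [ -f template.yaml ]; then
    sam build
    # Pass the event file to the function instead of calling it over HTTP.
    sam local invoke HelloWorldFunction --event "$event_file"
else
    echo "sam or template.yaml not found; run this from the project folder inside the dev container"
fi
```

If a more realistic API Gateway payload is needed, `sam local generate-event apigateway aws-proxy` can produce one to use in place of the hand-written file.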

Building up the winning sequence

With all of the above in mind, we ended up with roughly the following sequence to get debugging to work:

- started with a modified Docker-in-Docker template and added all the tools

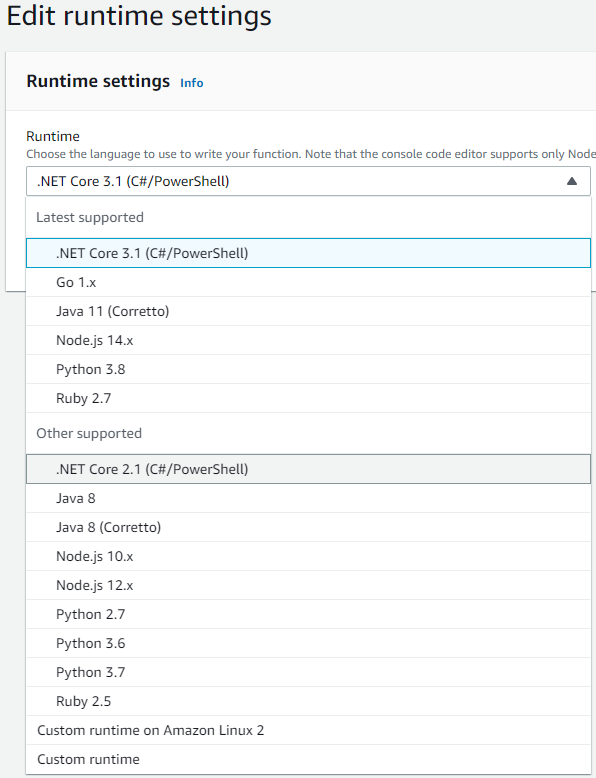

- opened the container and used the AWS extension to generate a Lambda skeleton app (after a couple of failed attempts we settled on the dotnetcore3.1 (image) template)

- let OmniSharp run, pick up all C# projects and restore packages

- rebuilt the container to reinitialise the extensions and make sure we were starting afresh

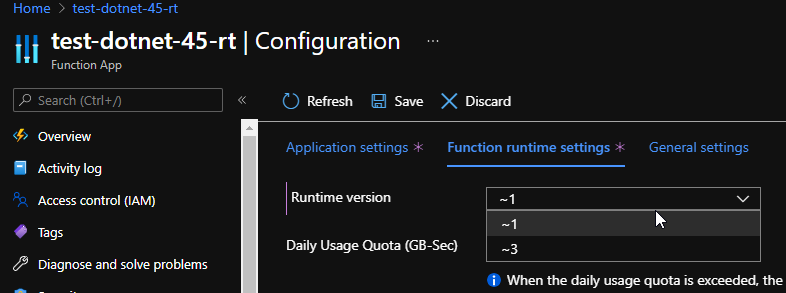

- once the container had reopened, used the AWS extension again to generate a launch configuration (it is important to let SAM know which version of dotnet we’re going to need; check launch.json to verify)

- and finally, ran it
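The runtime check from the sequence above can also be done from the terminal. This is a sketch that assumes the generated configuration lives at `.vscode/launch.json` and stores the runtime under a `"runtime"` key, as AWS Toolkit `aws-sam` configurations normally do; `launch_runtimes` is a made-up helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every "runtime" value found in a launch.json.
# grep/sed are used instead of a JSON parser because VS Code allows comments
# in launch.json, which strict JSON tools reject.
launch_runtimes() {
    local file="${1:-.vscode/launch.json}"
    grep -o '"runtime"[[:space:]]*:[[:space:]]*"[^"]*"' "$file" \
        | sed 's/.*"\([^"]*\)"$/\1/'
}

if [ -f .vscode/launch.json ]; then
    # For this project the value we are looking for is dotnetcore3.1.
    launch_runtimes .vscode/launch.json
fi
```

If nothing is printed, the configuration has no explicit runtime, and that is worth fixing before starting the debugger.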

Action!

As always, the code is on GitHub.