We love nice dashboards. And if you see a chart somewhere on a webpage, chances are it runs on D3.js. D3.js is a JavaScript library for manipulating documents based on data. It can do a great deal of visualisation work, but it comes with a bit of a learning curve: even though the data can come in any shape and form, the plotting and the transformations are written in JavaScript.

Declarative approach

This is where Vega comes in. Vega is a declarative visualisation grammar: data, transforms and marks are all described in JSON, so we can literally build and ship visualisations without writing any JavaScript at all!
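Because a Vega spec is just JSON, it can also be produced by whatever language already holds the data. A minimal sketch using only Python's standard `json` module: it turns a hypothetical `title -> parent title` mapping into the `values` rows used later in this post. The `to_values` helper and its input format are made up for illustration; the point is that Python's `None` is written out as JSON `null`, which is exactly how the root node is marked.

```python
import json
from typing import Optional

# Hypothetical input format: node title -> parent title (None marks the root).
PARENTS: dict[str, Optional[str]] = {
    "Animal": None,
    "Duck": "Animal",
    "Fish": "Animal",
    "Zebra": "Animal",
}


def to_values(parents: dict[str, Optional[str]]) -> list[dict]:
    """Turn the mapping into Vega-style rows with string ids and parent ids."""
    # Number the nodes in insertion order: "1", "2", ...
    ids = {title: str(i) for i, title in enumerate(parents, start=1)}
    return [
        {
            "id": ids[title],
            "parent": ids[parent] if parent is not None else None,
            "title": title,
        }
        for title, parent in parents.items()
    ]


spec_fragment = {"name": "tree", "values": to_values(PARENTS)}
# json.dumps writes Python's None as JSON null, matching "parent": null in the post.
print(json.dumps(spec_fragment, indent=2))
```

The generated rows are identical to the hand-written animal data below, so the output could be pasted straight into a spec's `data` section.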

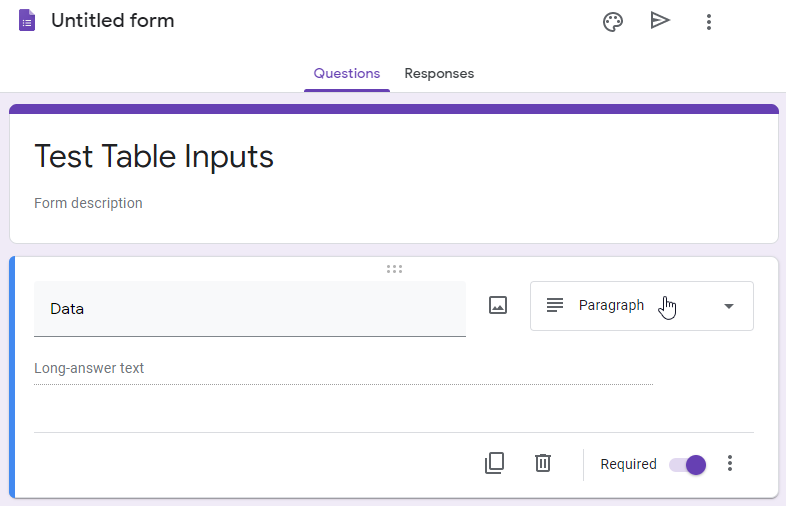

Step by step

Suppose we’ve got hierarchical animal data represented by the following JSON:

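```json
"values": [
  {"id": "1", "parent": null, "title": "Animal"},
  {"id": "2", "parent": "1", "title": "Duck"},
  {"id": "3", "parent": "1", "title": "Fish"},
  {"id": "4", "parent": "1", "title": "Zebra"}
]
```

What we can then do is lay the nodes out in a tree-like shape. The `stratify` transform turns the flat list into a hierarchy using the `id` and `parent` fields, and the `tree` transform then computes `x` and `y` coordinates for every node: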

```json
"transform": [
  {
    "type": "stratify",
    "key": "id",
    "parentKey": "parent"
  },
  {
    "type": "tree",
    "method": "tidy",
    "separation": true,
    "size": [{"signal": "width"}, {"signal": "height"}]
  }
]
```

Having laid out the nodes, we need to generate the connecting lines; the `treelinks` + `linkpath` combo does exactly that (the `//` comments are annotations only, since strict JSON does not allow comments):

```json
{
  "name": "links",
  "source": "tree", // take the "tree" data source as input
  "transform": [
    { "type": "treelinks" }, // first transform: one {source, target} tuple per parent-child edge
    {
      "type": "linkpath", // then turn each tuple into an SVG path string
      "shape": "diagonal"
    }
  ]
}
```

Now that we’ve got our data sources, we want to draw actual objects. In Vega these are called marks. For simplicity, I’m only drawing one rectangle with a title for each data point, plus some basic lines to connect them:

```json
"marks": [
  {
    "type": "path",
    "from": {"data": "links"}, // the dataset we defined above
    "encode": {
      "enter": {
        "path": {"field": "path"} // linkpath added a "path" field to each link; we just use it here
      }
    }
  },
  {
    "type": "rect",
    "from": {"data": "tree"},
    "encode": {
      "enter": {
        "stroke": {"value": "black"},
        "width": {"value": 100},
        "height": {"value": 20},
        "x": {"field": "x"},
        "y": {"field": "y"}
      }
    }
  },
  {
    "type": "text",
    "from": {"data": "tree"}, // use the data set we defined earlier
    "encode": {
      "enter": {
        "stroke": {"value": "black"},
        "text": {"field": "title"}, // data fields can be displayed directly
        "x": {"field": "x"}, // position comes from the fields computed by the tree layout
        "y": {"field": "y"},
        "dx": {"value": 50}, // offset by half the rect width so the label sits in the rectangle's center
        "dy": {"value": 13},
        "align": {"value": "center"}
      }
    }
  }
]
```

All in all, we arrived at a very basic hierarchical chart. It looks kinda plain and can definitely be improved: the rectangles should probably be replaced with groups, and the connecting paths need some work too (they end at each rectangle’s top-left corner, because `x` and `y` position a `rect` by its corner).
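If the data side still feels a bit magical, here is a rough plain-Python model of what `stratify`, `treelinks` and `linkpath` produce for the four animals. It is a sketch, not Vega’s implementation: the node coordinates are hypothetical numbers typed in by hand instead of being computed by the tidy `tree` layout, and `diagonal_path` only approximates a vertical diagonal link (a cubic Bézier whose control points sit halfway between the two nodes), so Vega’s exact path strings may differ.

```python
VALUES = [
    {"id": "1", "parent": None, "title": "Animal"},
    {"id": "2", "parent": "1", "title": "Duck"},
    {"id": "3", "parent": "1", "title": "Fish"},
    {"id": "4", "parent": "1", "title": "Zebra"},
]

# Hypothetical (x, y) positions standing in for what the "tree" transform computes.
POSITIONS = {"1": (400.0, 0.0), "2": (100.0, 300.0), "3": (400.0, 300.0), "4": (700.0, 300.0)}


def stratify(rows: list[dict]) -> tuple[dict, dict[str, list[dict]]]:
    """Return the single root row and a parent id -> children mapping."""
    by_id = {row["id"]: row for row in rows}
    roots = [row for row in rows if row["parent"] is None]
    if len(roots) != 1:
        raise ValueError("a hierarchy needs exactly one root")
    children: dict[str, list[dict]] = {row_id: [] for row_id in by_id}
    for row in rows:
        if row["parent"] is not None:
            if row["parent"] not in by_id:
                raise ValueError(f"missing parent {row['parent']!r}")
            children[row["parent"]].append(row)
    return roots[0], children


def treelinks(children: dict[str, list[dict]]) -> list[tuple[str, str]]:
    """One (source id, target id) pair per parent-child edge."""
    return [(parent_id, child["id"]) for parent_id, kids in children.items() for child in kids]


def diagonal_path(sx: float, sy: float, tx: float, ty: float) -> str:
    """SVG path for a vertical diagonal link that bends at mid-height."""
    my = (sy + ty) / 2
    return f"M{sx},{sy}C{sx},{my} {tx},{my} {tx},{ty}"


root, children = stratify(VALUES)
for source, target in treelinks(children):
    print(source, "->", target, diagonal_path(*POSITIONS[source], *POSITIONS[target]))
```

Each printed string plays the role of the `path` field that the `path` mark reads with `{"field": "path"}`.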

Here is the complete spec for reference; it already includes the `tree-boxes` and `tree-circles` datasets and marks introduced in the next section:

```json
{
  "$schema": "https://vega.github.io/schema/vega/v5.json",
  "width": 800,
  "height": 300,
  "padding": 5,
  "data": [
    {
      "name": "tree",
      "values": [
        {"id": "1", "parent": null, "title": "Animal"},
        {"id": "2", "parent": "1", "title": "Duck"},
        {"id": "3", "parent": "1", "title": "Fish"},
        {"id": "4", "parent": "1", "title": "Zebra"}
      ],
      "transform": [
        {
          "type": "stratify",
          "key": "id",
          "parentKey": "parent"
        },
        {
          "type": "tree",
          "method": "tidy",
          "separation": true,
          "size": [{"signal": "width"}, {"signal": "height"}]
        }
      ]
    },
    {
      "name": "links",
      "source": "tree",
      "transform": [
        { "type": "treelinks" },
        { "type": "linkpath", "shape": "diagonal" }
      ]
    },
    {
      "name": "tree-boxes",
      "source": "tree",
      "transform": [
        { "type": "filter", "expr": "datum.parent == null" }
      ]
    },
    {
      "name": "tree-circles",
      "source": "tree",
      "transform": [
        { "type": "filter", "expr": "datum.parent != null" }
      ]
    }
  ],
  "marks": [
    {
      "type": "path",
      "from": {"data": "links"},
      "encode": {
        "enter": {
          "path": {"field": "path"}
        }
      }
    },
    {
      "type": "rect",
      "from": {"data": "tree-boxes"},
      "encode": {
        "enter": {
          "stroke": {"value": "black"},
          "width": {"value": 100},
          "height": {"value": 20},
          "x": {"field": "x"},
          "y": {"field": "y"}
        }
      }
    },
    {
      "type": "symbol",
      "from": {"data": "tree-circles"},
      "encode": {
        "enter": {
          "stroke": {"value": "black"},
          "size": {"value": 400},
          "x": {"field": "x"},
          "y": {"field": "y"}
        }
      }
    },
    {
      "type": "rect",
      "from": {"data": "tree"},
      "encode": {
        "enter": {
          "stroke": {"value": "black"},
          "width": {"value": 100},
          "height": {"value": 20},
          "x": {"field": "x"},
          "y": {"field": "y"}
        }
      }
    },
    {
      "type": "text",
      "from": {"data": "tree"},
      "encode": {
        "enter": {
          "stroke": {"value": "black"},
          "text": {"field": "title"},
          "x": {"field": "x"},
          "y": {"field": "y"},
          "dx": {"value": 50},
          "dy": {"value": 13},
          "align": {"value": "center"}
        }
      }
    }
  ]
}
```

Getting a bit fancier

Suppose we would like to render different shapes for the root and the leaf nodes of our chart. One way to achieve this is to derive two new datasets from the `tree` dataset, each running its own `filter` transform:

```json
{
  "name": "tree-boxes",
  "source": "tree", // grab the existing data
  "transform": [
    {
      "type": "filter",
      "expr": "datum.parent == null" // keep only rows where the expression is true: the root
    }
  ]
},
{
  "name": "tree-circles",
  "source": "tree",
  "transform": [
    {
      "type": "filter",
      "expr": "datum.parent != null" // everything except the root
    }
  ]
}
```

Then, instead of rendering all nodes as `rect`, we want a different shape for each of the filtered datasets:

```json
{
  "type": "rect",
  "from": {"data": "tree-boxes"},
  "encode": {
    "enter": {
      "stroke": {"value": "black"},
      "width": {"value": 100},
      "height": {"value": 20},
      "x": {"field": "x"},
      "y": {"field": "y"}
    }
  }
},
{
  "type": "symbol",
  "from": {"data": "tree-circles"},
  "encode": {
    "enter": {
      "stroke": {"value": "black"},
      "size": {"value": 400}, // symbols are sized by area in square pixels, not by width/height
      "x": {"field": "x"},
      "y": {"field": "y"}
    }
  }
}
```

Demo time

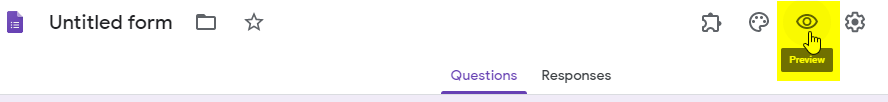
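The two filter expressions are complements of each other, so every node lands in exactly one of `tree-boxes` and `tree-circles`. A tiny Python sketch of that split, assuming Python’s `is None` check is a fair stand-in for Vega’s `datum.parent == null` test (it is for this data, where the root’s parent is an explicit `null`); `split_by_root` is a hypothetical helper, not part of Vega:

```python
ROWS = [
    {"id": "1", "parent": None, "title": "Animal"},
    {"id": "2", "parent": "1", "title": "Duck"},
    {"id": "3", "parent": "1", "title": "Fish"},
    {"id": "4", "parent": "1", "title": "Zebra"},
]


def split_by_root(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Mimic the two filters: rows without a parent vs. all other rows."""
    # .get() also treats a missing "parent" key as "no parent", much like JavaScript's == null.
    boxes = [row for row in rows if row.get("parent") is None]
    circles = [row for row in rows if row.get("parent") is not None]
    return boxes, circles


tree_boxes, tree_circles = split_by_root(ROWS)
print([row["title"] for row in tree_boxes])    # ['Animal']
print([row["title"] for row in tree_circles])  # ['Duck', 'Fish', 'Zebra']
```

Because the two lists never overlap and together cover every row, the `rect` and `symbol` marks that read from them never draw the same node twice.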

Play with Vega in the live editor here.