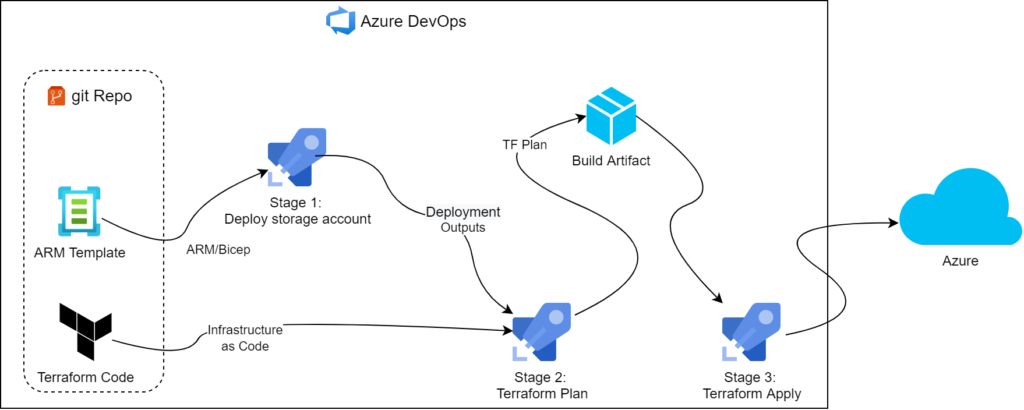

We have hit this snag while building our zero touch Terraform pipeline. And while the Internet has examples of navigating the issue with PowerShell, we could not find one that would work with Bash. Technically, PowerShell 7.x comes preinstalled on our choice of Hosted Agents too, so we could’ve used existing solution. But we felt it was a good opportunity to look at Logging Commands and ultimately could not pass up an opportunity to build something new.

Problem statement

Suppose we’ve got an Azure Resource Group Deployment task (which now supports Bicep templates natively by the way). It’s got a way of feeding deployment outputs back to the pipeline: deploymentOutputs which takes a string and returns it as a variable:

trigger: none

name: ARM Deploy

pool:

vmImage: 'ubuntu-latest'

stages:

- stage: arm_deployment

jobs:

- job: deploy

steps:

- task: AzureResourceManagerTemplateDeployment@3

inputs:

deploymentScope: 'Subscription'

azureResourceManagerConnection: $(predefinedAzureServiceConnection)

subscriptionId: $(targetSubscriptionId)

location: $(location)

csmFile: '$(Build.SourcesDirectory)/arm-template.json'

deploymentOutputs: 'outputVariablesGoHere' # this is where ARM outputs will go

- script: |

echo $ARM_DEPLOYMENT_OUTPUT

env:

ARM_DEPLOYMENT_OUTPUT: $(outputVariablesGoHere)

Let us assume our ARM template has outputs along the following lines:

"outputs": {

"resourceGroupName": {

"type": "string",

"value": "[parameters('rg_name')]"

},

"storageAccountName": {

"type": "string",

"value": "[reference(extensionResourceId(format('/subscriptions/{0}/resourceGroups/{1}', subscription().subscriptionId, parameters('rg_name')), 'Microsoft.Resources/deployments', 'deployment')).outputs.storageAccountName.value]"

}

}

then, the pipeline would produce the following output:

Starting: AzureResourceManagerTemplateDeployment

==============================================================================

...

Starting Deployment.

Updated output variable 'outputVariablesGoHere.storageAccountName.type', which contains the outputs section of the current deployment object in string format.

Updated output variable 'outputVariablesGoHere.storageAccountName.value', which contains the outputs section of the current deployment object in string format.

...

Updated output variable 'outputVariablesGoHere', which contains the outputs section of the current deployment object in string format.

Finishing: AzureResourceManagerTemplateDeployment

Starting: CmdLine

==============================================================================

...

Script contents:

echo $ARM_DEPLOYMENT_OUTPUT

========================== Starting Command Output ===========================

{"storageAccountName":{"type":"String","value":"xxxxxxxxx"},"resourceGroupName":{"type":"String","value":"xxxxxxxx"}}

Finishing: CmdLine

ADO does not support parsing JSON

By default, ADO would treat the whole object as one string and would not get us very far with it. So, we need to parse JSON and define more variables. We could opt for PowerShell task to do that, but since we’re using Ubuntu on our agents, we felt Bash would be a bit more appropriate. Let’s update the pipeline a bit and replace our simplistic echo script with a bit more logic:

- script: |

echo "##vso[task.setvariable variable=resourceGroupName;isOutput=true]`echo $ARM_DEPLOYMENT_OUTPUT | jq -r '.resourceGroupName.value'`"

echo "##vso[task.setvariable variable=storageAccountName;isOutput=true]`echo $ARM_DEPLOYMENT_OUTPUT | jq -r '.storageAccountName.value'`"

echo "##vso[task.setvariable variable=containerName;isOutput=true]`echo $ARM_DEPLOYMENT_OUTPUT | jq -r '.containerName.value'`"

echo "##vso[task.setvariable variable=storageAccessKey;isOutput=true;isSecret=true]`echo $ARM_DEPLOYMENT_OUTPUT | jq -r '.storageAccessKey.value'`"

env:

ARM_DEPLOYMENT_OUTPUT: $(outputVariablesGoHere)

Here we pass our input to jq, the JSON parser that comes preinstalled with ubuntu-latest. Then we craft a string that ADO Agent picks up and interprets as command (in this case, setting pipeline variable). These special strings are called Logging Commands.

One crucial thing to remember here is to call jq with --raw-output/-r command line parameter – this would ensure resulting strings are unquoted. Having \"value\" vs value can easily break the build and is awfully hard to troubleshoot.

Conclusion

This little script is just a sample of what’s possible. PowerShell examples online usually opt for a universal approach and enumerate all keys on the object. We’re certain Bash can offer the same flexibility but since our use case was limited by just a couple of variables, we’d keep it straight to the point and leave generalisation to readers.